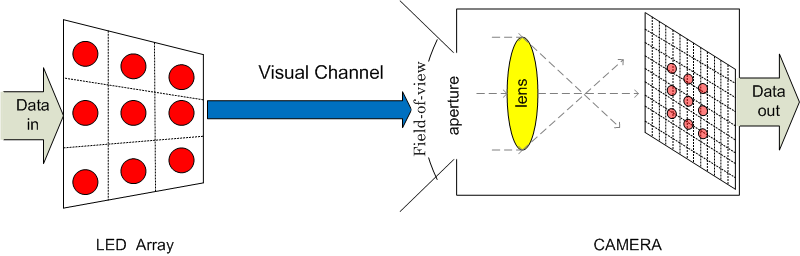

Visual Channel Models

Unlike RF MIMO channels, which are characterized by multipath and fading, visual channels are characterized mainly by visual distortions. We model a visual MIMO channel subject to perspective distortion and other artifacts due to lens blur and spatial interference from multiple light emitters, as well as synchronization mismatch at the optical-array receiver. We borrow from computer vision theory and also propose techniques that apply to camera-based communication channels in general.
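In computer vision, perspective distortion of a planar transmitter (such as an LED array or a screen) is commonly modeled by a 3×3 homography H: a point (x, y) on the transmitter plane, written in homogeneous coordinates as (x, y, 1), maps to (u, v, w) = H·(x, y, 1) and appears in the image at (u/w, v/w). The sketch below, in plain Python, applies this mapping to a hypothetical LED grid; the matrix `H_EXAMPLE` and the helper names are illustrative assumptions, not parameters taken from the papers listed here.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Matrix3 = List[List[float]]


def apply_homography(H: Matrix3, p: Point) -> Point:
    """Map a 2D point through a 3x3 homography using homogeneous coordinates."""
    x, y = p
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    return (u / w, v / w)


def led_grid(rows: int, cols: int, pitch: float) -> List[Point]:
    """Hypothetical planar LED array: emitter centres on a regular grid."""
    return [(c * pitch, r * pitch) for r in range(rows) for c in range(cols)]


# Hypothetical homography: scaling and translation plus a small projective
# term H[2][0], which squeezes emitters together as x grows (an oblique view).
H_EXAMPLE: Matrix3 = [
    [2.0, 0.0, 10.0],
    [0.0, 2.0, 5.0],
    [0.001, 0.0, 1.0],
]

if __name__ == "__main__":
    for p in led_grid(2, 3, pitch=100.0):
        print(p, "->", apply_homography(H_EXAMPLE, p))
```

In this example, LEDs spaced evenly on the transmitter land roughly 181 and then 151 pixels apart along x in the image, which is one reason a receiver needs some model of the viewing geometry before it can locate each emitter.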

Links: MobiCom'10 [paper], [Rutgers Engineering Week Poster], CISS'11 [paper], ProCam'11 [paper]


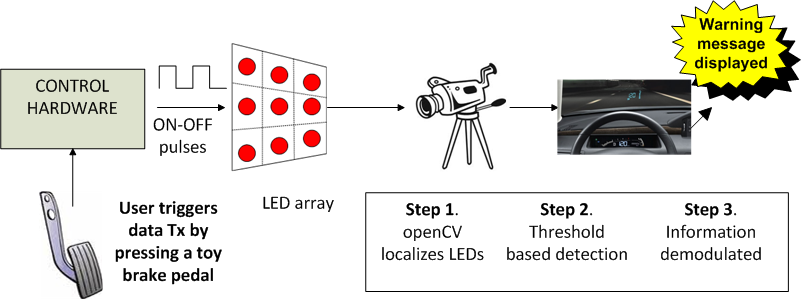

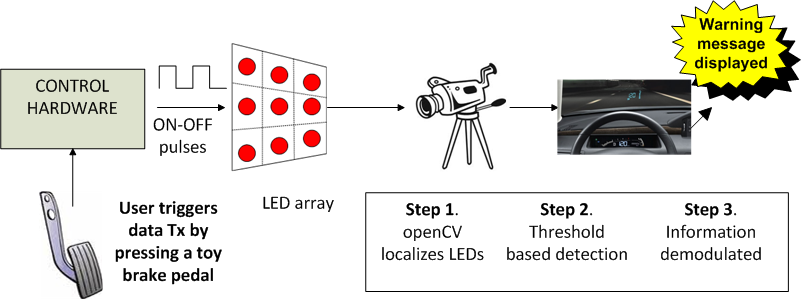

LED-to-Camera Communication for Car-2-Car Communication

The inherent limits on RF spectrum availability and RF's susceptibility to interference make it difficult to meet the reliability required by automotive safety applications. Visual MIMO applied to vehicular communication proposes reusing existing LED headlights and taillights as transmitters and existing cameras (e.g., parking-assistance and rear-view cameras) as receivers.

We designed a proof-of-concept prototype of a visual MIMO system consisting of an LED transmitter array and a high-speed camera. We also propose link-layer techniques, such as rate adaptation, that let such systems adapt to visual channel distortions.
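To make the "MIMO" part concrete, here is a minimal sketch of spatial multiplexing with an LED array, assuming simple on-off keying with one bit per LED per camera frame and assuming the receiver already knows the pixel where each LED appears (for example, from a calibration step). The function names, the toy one-pixel-per-LED camera model, and the 0.5 threshold are hypothetical; this is not the prototype's actual modulation or decoder.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int]    # (row, col) where an LED appears in the image
Frame = List[List[float]]  # grayscale intensities in [0, 1], frame[row][col]


def encode_bits(bits: Sequence[int], rows: int, cols: int) -> List[List[int]]:
    """Assign one bit per LED in row-major order: 1 = LED on, 0 = LED off."""
    if len(bits) != rows * cols:
        raise ValueError("need exactly one bit per LED")
    return [list(bits[r * cols:(r + 1) * cols]) for r in range(rows)]


def render_frame(pattern: List[List[int]], led_pixels: Sequence[Pixel],
                 height: int, width: int, on: float = 0.9, off: float = 0.1) -> Frame:
    """Toy camera model: each LED lights exactly one pixel; all else is dark.

    A real visual channel would add perspective distortion, blur, and
    interference between neighbouring LEDs.
    """
    frame = [[0.0] * width for _ in range(height)]
    flat = [bit for row in pattern for bit in row]
    for bit, (r, c) in zip(flat, led_pixels):
        frame[r][c] = on if bit else off
    return frame


def decode_frame(frame: Frame, led_pixels: Sequence[Pixel],
                 threshold: float = 0.5) -> List[int]:
    """Recover one bit per LED by thresholding the intensity at its known pixel."""
    return [1 if frame[r][c] >= threshold else 0 for (r, c) in led_pixels]


if __name__ == "__main__":
    bits = [1, 0, 1, 1, 0, 0]
    led_pixels = [(2, 2), (2, 6), (2, 10), (6, 2), (6, 6), (6, 10)]  # 2 x 3 array
    frame = render_frame(encode_bits(bits, 2, 3), led_pixels, height=10, width=12)
    print(decode_frame(frame, led_pixels))  # -> [1, 0, 1, 1, 0, 0]
```

In this toy model each frame carries one bit per resolvable LED, so distortions that merge neighbouring LEDs reduce the usable number of parallel channels; that is one intuition, not necessarily the authors' scheme, for why a link layer would adapt its rate.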

Links: MobiSys'11 [paper][WINLAB Poster], SECON'11 [paper][slides]


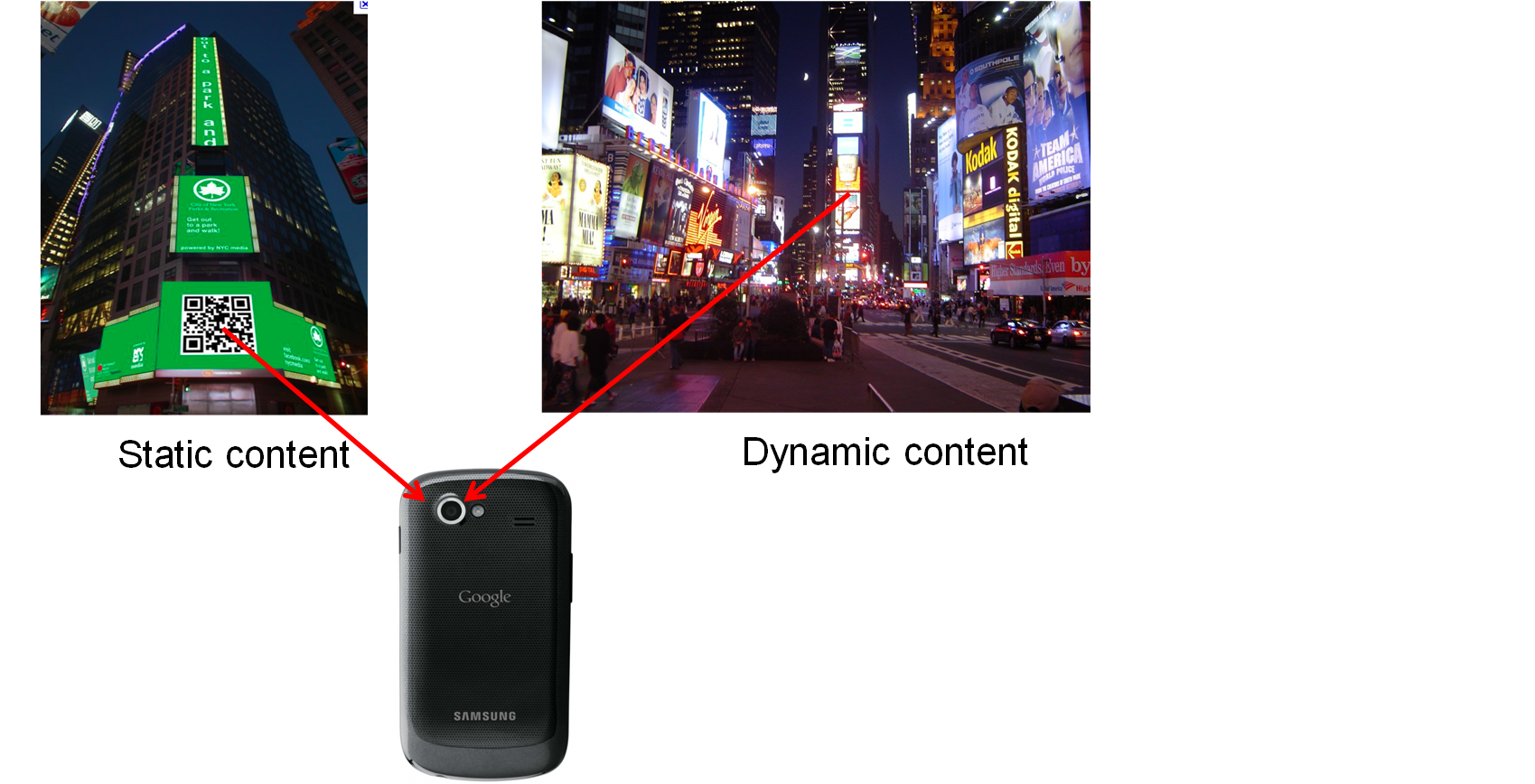

Display Screen-to-Camera Communication

The ubiquity of QR codes motivates novel camera communication applications in which cameras decode information from pervasive display screens such as billboards, TVs, and computer monitors. We are exploring methods, inspired by communication and computer vision techniques, to communicate from display screens to off-the-shelf camera devices.

Links: WACV'12 [paper], CVPR'12 [poster], IEEE GlobalSIP [accepted manuscript]


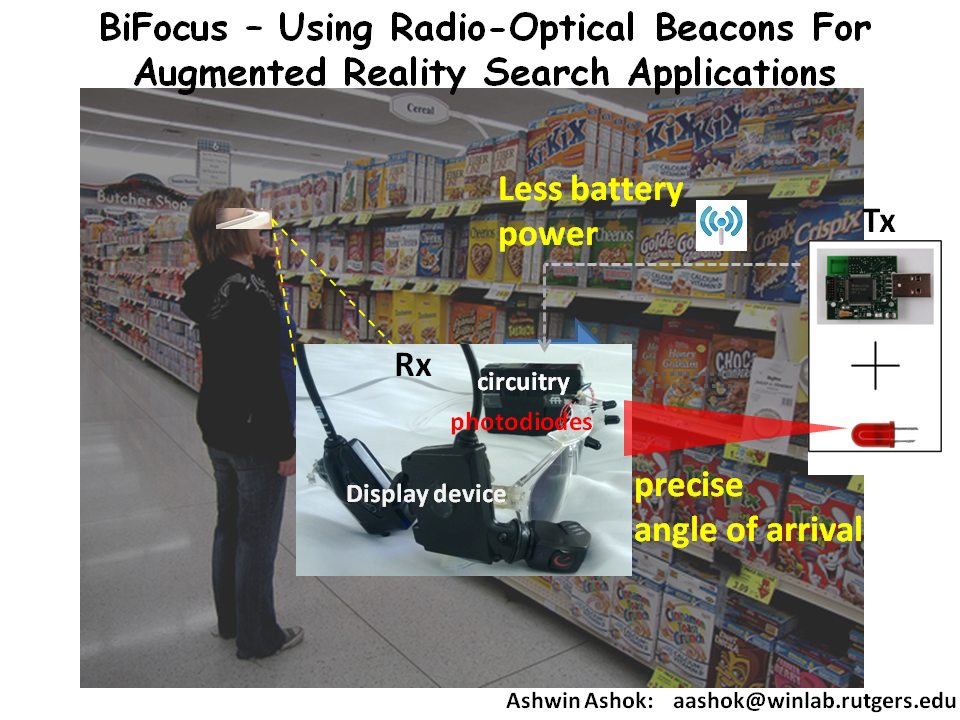

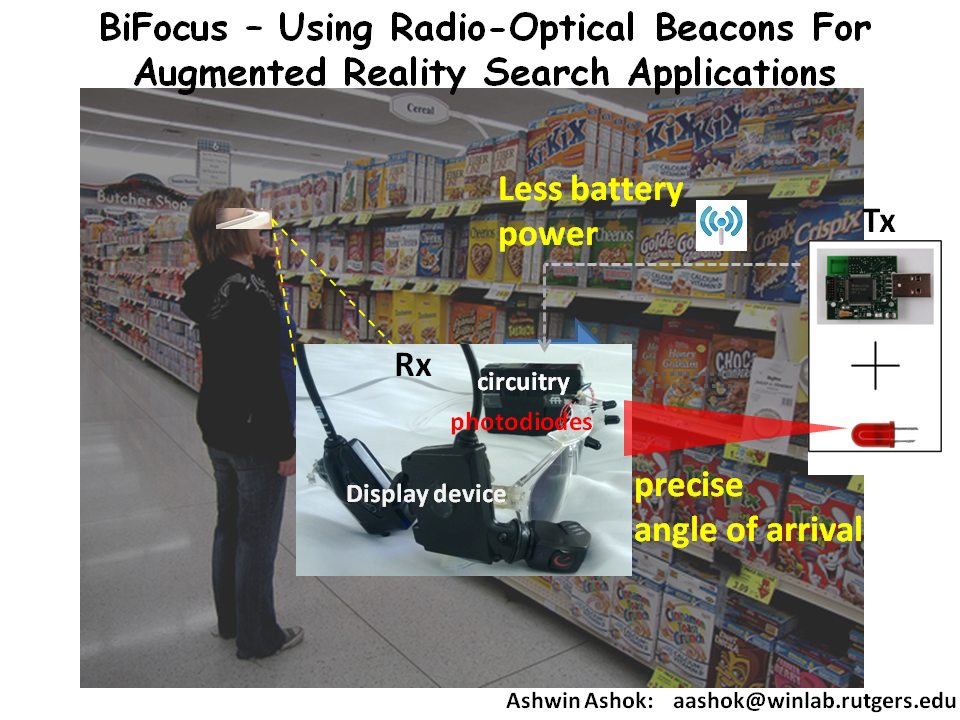

BiFocus: Fine-grained Positioning through a Radio-Synchronized Optical Link

Accurately estimating the relative angles and ranges between nearby devices is a hard problem, yet it is important to many context-aware applications such as augmented reality, autonomous automotive systems, and smart manufacturing systems. Existing indoor localization techniques cannot meet these applications' requirements under the given resource constraints. We have started exploring a technique that integrates wireless wearable devices with hardware adjuncts to provide high-accuracy spatial context information about objects and people in indoor environments.

Links: MobiSys'13 demo [paper][prelim video]


Time-of-Flight camera based Communication

Time-of-flight (ToF) cameras and other depth-sensing cameras, such as Microsoft's Kinect, have become popular because they measure depth, that is, how far an object is from the camera. We are exploring techniques to use such cameras for communication along the lines of visual MIMO.

(More information coming soon.)
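As background on how a ToF camera answers "how far is the object": a pulsed ToF sensor times the light's round trip, so distance is d = c·Δt/2; a continuous-wave ToF sensor instead measures the phase shift φ of light modulated at frequency f_mod, giving d = c·φ/(4π·f_mod), which is unambiguous only up to c/(2·f_mod). The helpers below are a minimal sketch of these standard relations; the function names and the 30 MHz example are illustrative assumptions, and this is not the communication technique being explored.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact, by SI definition)


def distance_from_round_trip(t_round_trip_s: float) -> float:
    """Pulsed ToF: light travels to the object and back, so halve the path."""
    return SPEED_OF_LIGHT * t_round_trip_s / 2.0


def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """Continuous-wave ToF: phase shift in [0, 2*pi) of the modulation envelope."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance before the measured phase wraps around (phase = 2*pi)."""
    return SPEED_OF_LIGHT / (2.0 * f_mod_hz)


if __name__ == "__main__":
    print(distance_from_round_trip(10e-9))     # about 1.499 m for a 10 ns round trip
    print(unambiguous_range(30e6))             # about 4.997 m at 30 MHz modulation
    print(distance_from_phase(math.pi, 30e6))  # half of that, about 2.498 m
```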
