Introduction

Hello and welcome to our project page!

The goal of our project is to create a practical application with the Microsoft Kinect Sensor. Before diving into details about our project, here is an introduction to the hardware and software used in this project.

The Kinect Sensor

How the Kinect Works

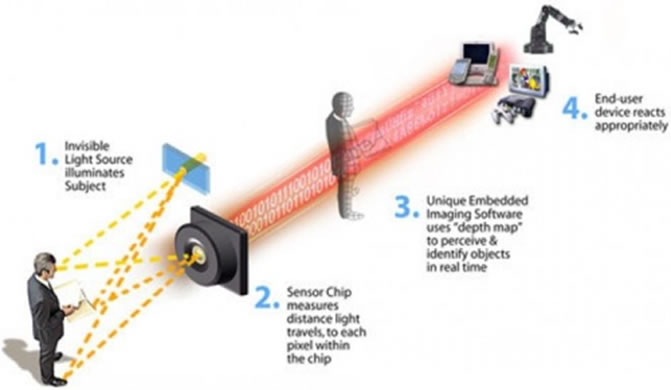

The Microsoft Kinect is a hands-free motion-sensing device that, coupled with the Xbox 360, lets its users play video games without the aid of a controller. The Kinect sensor deciphers the shapes of people and objects in 3D space with the help of infrared light emitted by the sensor. These beams of light bounce off the objects and back onto the sensor's camera, and a chip integrated into the camera encodes this information. The program running on this chip then starts deciphering shapes that are characteristic of human beings, such as hands or a head. Once it has made out the shape of its user, the user can communicate with the sensor through gestures.

Tech Specs of the Kinect

Field of View

Horizontal field of view: 57 degrees

Vertical field of view: 43 degrees

Physical tilt range: ± 27 degrees

Depth sensor range: 1.2m – 3.5m
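
To get a feel for what these angles mean in a room, the width and height of the area the sensor can see at a given distance follow from basic trigonometry: extent = 2 × distance × tan(angle / 2). The sketch below is only an idealised estimate. It assumes a perfect symmetric viewing cone with no lens distortion, and the function names are ours, not part of any Kinect software.

```python
import math

# Figures taken from the spec list above.
H_FOV_DEG = 57.0    # horizontal field of view
V_FOV_DEG = 43.0    # vertical field of view
MIN_RANGE_M = 1.2   # near end of the depth sensor range
MAX_RANGE_M = 3.5   # far end of the depth sensor range


def coverage(distance_m, fov_deg):
    """Extent in metres seen at distance_m for one field-of-view angle."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def view_size(distance_m):
    """Return (width, height) in metres of the visible area at distance_m."""
    return coverage(distance_m, H_FOV_DEG), coverage(distance_m, V_FOV_DEG)


if __name__ == "__main__":
    for d in (MIN_RANGE_M, 2.0, MAX_RANGE_M):
        w, h = view_size(d)
        print(f"{d:.1f} m away: about {w:.2f} m wide x {h:.2f} m high")
```

Under these assumptions the sensor sees an area roughly 1.3 m wide at the near end of its range and roughly 3.8 m wide at the far end. This is why a user standing too close is cut off at the edges.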

Data Streams

Depth camera: 320×240, 16-bit @ 30 frames/sec (monochrome video stream at QVGA resolution, with 65,536 levels of sensitivity)

Color camera: 640×480, 32-bit color @ 30 frames/sec

16-bit audio @ 16 kHz

Resolution

640×480 pixels @ 30 Hz (RGB camera)

640×480 pixels @ 30 Hz (IR depth-finding camera)
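
Together with the field-of-view angles listed earlier, the 640×480 depth resolution is enough to sketch how a single depth pixel could be turned into a point in 3D space. The example below assumes an ideal pinhole camera whose optical centre sits exactly in the middle of the image. A real sensor needs calibrated values, so treat the constants and the helper name `pixel_to_point` as illustrative assumptions rather than the Kinect's actual processing.

```python
import math

WIDTH, HEIGHT = 640, 480             # depth image size from the spec list
H_FOV_DEG, V_FOV_DEG = 57.0, 43.0    # field of view from the spec list

# Focal lengths in pixels, derived from the field of view (pinhole assumption).
FX = (WIDTH / 2) / math.tan(math.radians(H_FOV_DEG) / 2)
FY = (HEIGHT / 2) / math.tan(math.radians(V_FOV_DEG) / 2)

# Assumed principal point: the exact image centre.
CX, CY = WIDTH / 2, HEIGHT / 2


def pixel_to_point(u, v, depth_m):
    """Back-project pixel (u, v) with depth in metres to (x, y, z) in metres.

    x grows to the right and y grows downward, matching image coordinates;
    z is the distance along the camera's viewing axis.
    """
    x = (u - CX) * depth_m / FX
    y = (v - CY) * depth_m / FY
    return x, y, depth_m


if __name__ == "__main__":
    # A pixel at the image centre lies straight ahead of the sensor.
    print(pixel_to_point(320, 240, 2.0))
    # A pixel at the right-hand edge lies well off to the side.
    print(pixel_to_point(640, 240, 2.0))
```

Applying this to every pixel of a depth frame yields a point cloud, the kind of raw 3D data from which body shapes can then be picked out.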

Connectivity

USB 2.0
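
As a rough sanity check, the raw data rate of the three streams listed under Data Streams can be added up and compared with the 480 Mbit/s nominal signalling rate of USB 2.0. This is a back-of-the-envelope sketch. It assumes uncompressed frames exactly as listed and a single audio channel, and it ignores protocol overhead, so the data actually sent over the cable may look different.

```python
USB2_NOMINAL_MBIT_S = 480.0  # USB 2.0 high-speed nominal signalling rate


def video_mbit_s(width, height, bits_per_pixel, fps):
    """Raw (uncompressed) video data rate in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


def audio_mbit_s(bits_per_sample, sample_rate_hz, channels=1):
    """Raw audio data rate in megabits per second (one channel assumed)."""
    return bits_per_sample * sample_rate_hz * channels / 1e6


depth = video_mbit_s(320, 240, 16, 30)   # depth stream from the spec list
color = video_mbit_s(640, 480, 32, 30)   # color stream from the spec list
audio = audio_mbit_s(16, 16_000)         # audio stream from the spec list
total = depth + color + audio

if __name__ == "__main__":
    print(f"depth: {depth:.3f} Mbit/s")
    print(f"color: {color:.3f} Mbit/s")
    print(f"audio: {audio:.3f} Mbit/s")
    print(f"total: {total:.3f} Mbit/s of {USB2_NOMINAL_MBIT_S:.0f} Mbit/s nominal")
```

Under these assumptions the color stream dominates at about 295 Mbit/s, and the total of about 332 Mbit/s fits within the nominal USB 2.0 rate, though without much headroom.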

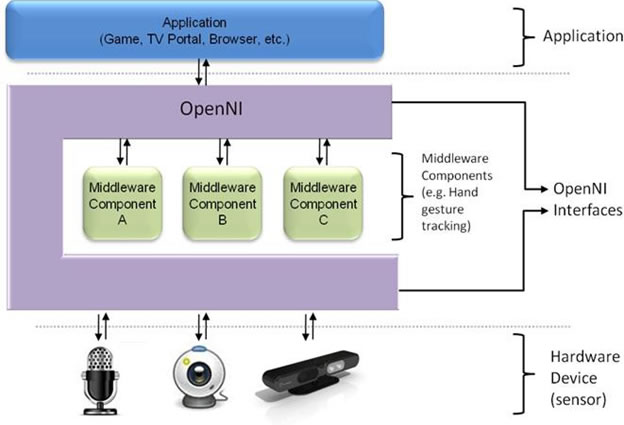

OpenNI

OpenNI is a non-profit organization established by PrimeSense, the makers of the raw technology behind the Kinect. Through its website, OpenNI provides open source drivers for the Kinect, which make it easier for software developers and researchers to program the Kinect from their computers. The framework provided by OpenNI contains low-level hardware support in the form of drivers for the actual cameras and other sensors, and also provides advanced visual tracking that maps a user onto a virtual 3D skeleton. Thus, OpenNI provides a communication interface that interacts with both the sensors and the middleware components that analyze the data captured by the hardware device, as illustrated by the following figure: